I received my Ph.D. degree from Nanyang Technological University, under the supervision of Prof. Lu Shijian. My research focuses on computer vision and machine learning, particularly transfer learning and multi-modal learning, including unsupervised domain adaptation and vision-language models.

Before my Ph.D. studies, I received a B.Sc. degree in electronic information science and technology from the University of Electronic Science and Technology of China (UESTC) and an M.Sc. degree in signal processing from Nanyang Technological University (NTU).

🔥 News

- 2025.10: Our new survey paper on agentic MLLMs is released!

- 2025.09: Our papers Mulberry and R1-ShareVL are accepted by NeurIPS 2025! Mulberry is selected as a Spotlight! 🎉

- 2025.08: Our survey paper on Visual Instruction Tuning is accepted by IJCV!

- 2025.06: Two papers are accepted by ICCV 2025!

- 2024.09: Two papers are accepted by NeurIPS 2024!

📝 Publications

2025

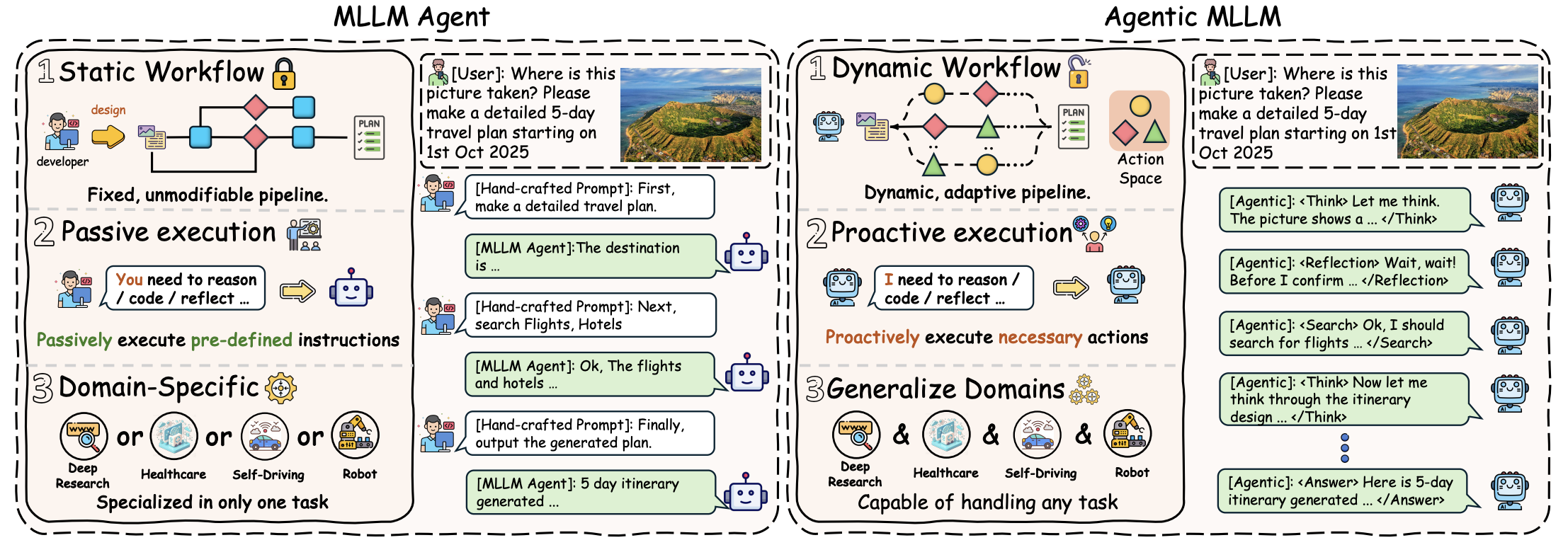

A Survey on Agentic Multimodal Large Language Models

Huanjin Yao, Ruifei Zhang, Jiaxing Huang, Jingyi Zhang, Yibo Wang, Bo Fang, Ruolin Zhu, Yongcheng Jing, Shunyu Liu, Guanbin Li, Dacheng Tao

arXiv 2025

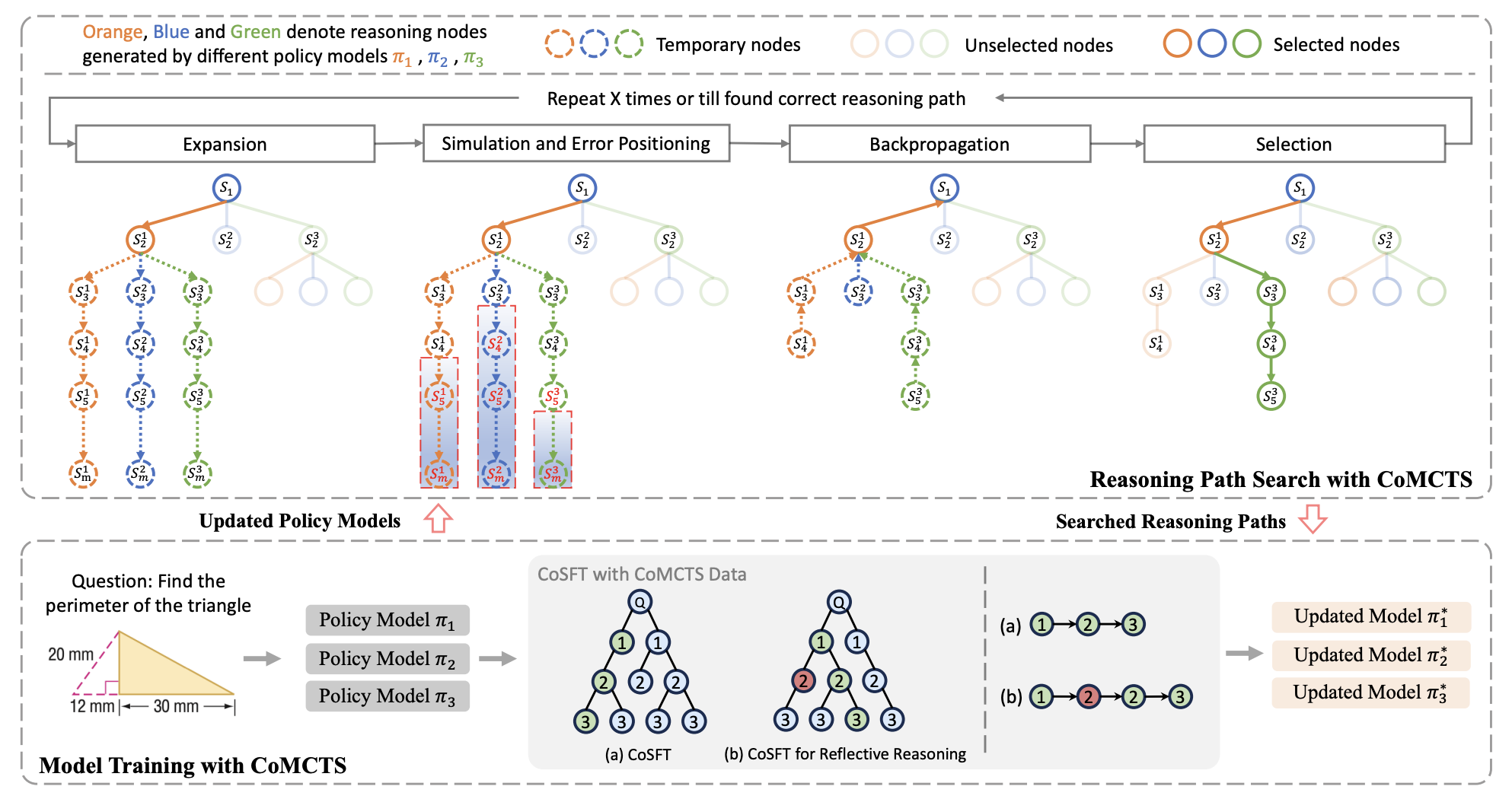

Huanjin Yao, Jiaxing Huang, Wenhao Wu, Jingyi Zhang, Yibo Wang, Shunyu Liu, Yingjie Wang, Yuxin Song, Haocheng Feng, Li Shen, Dacheng Tao

NeurIPS 2025 (Spotlight)

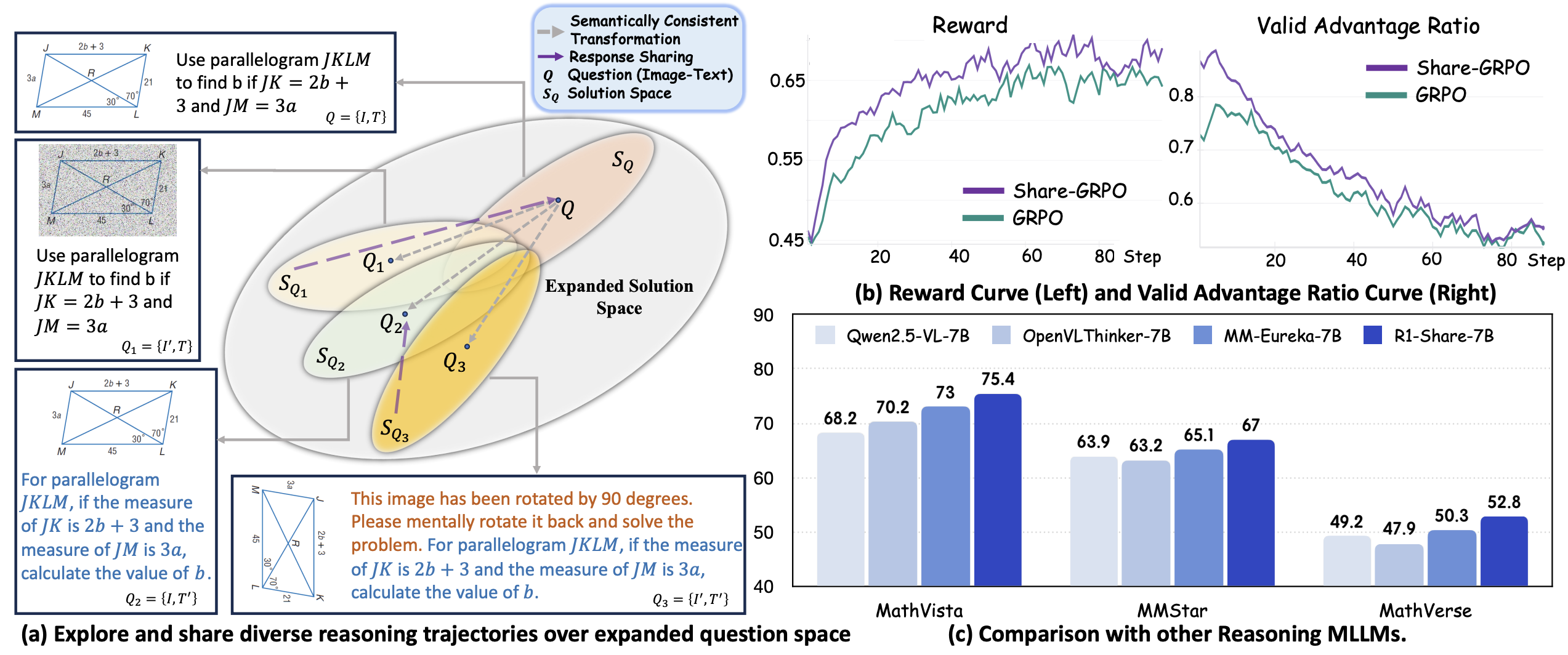

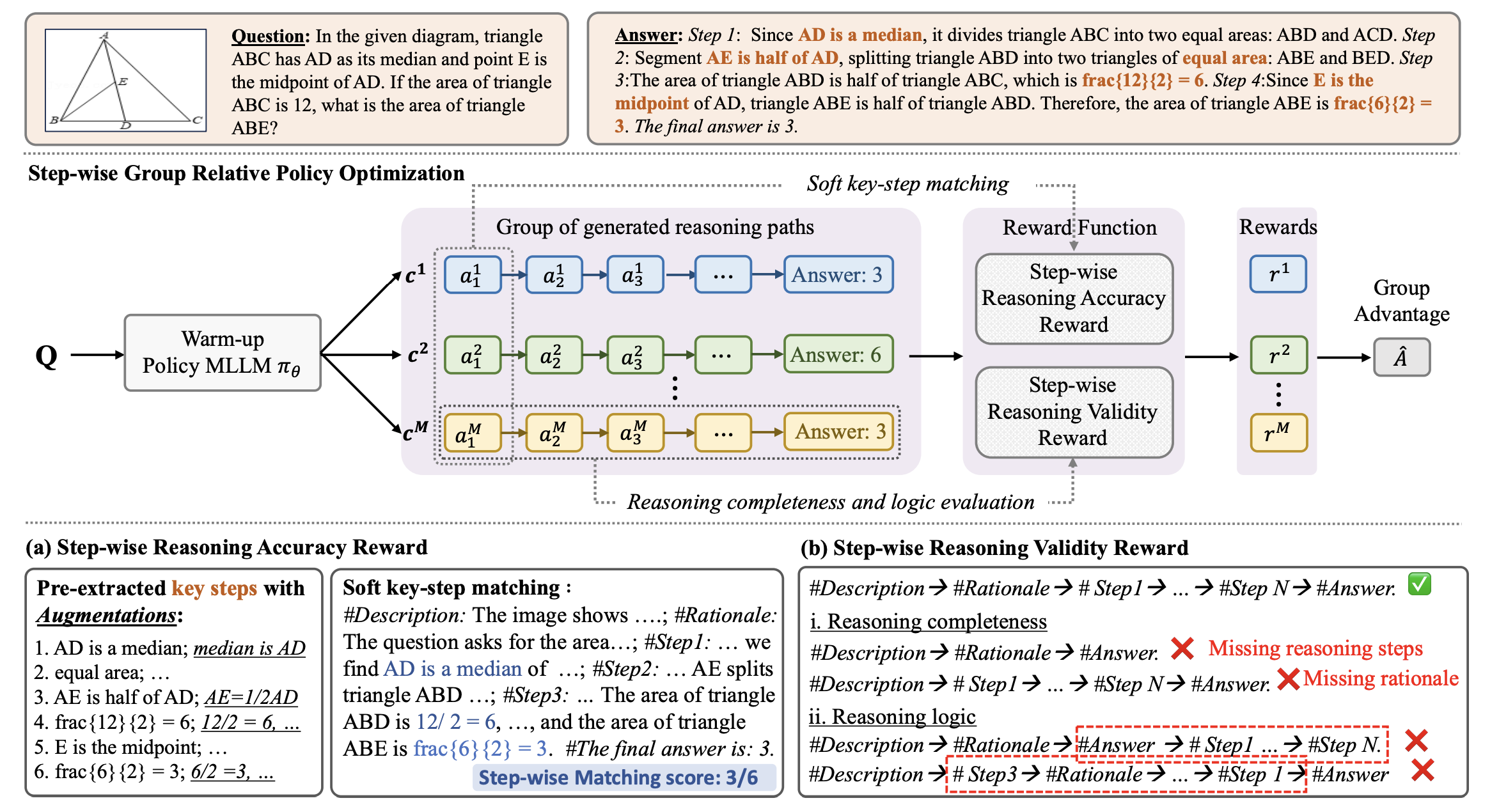

R1-ShareVL: Incentivizing Reasoning Capability of Multimodal Large Language Models via Share-GRPO

Huanjin Yao, Qixiang Yin, Jingyi Zhang, Min Yang, Yibo Wang, Wenhao Wu, Fei Su, Li Shen, Minghui Qiu, Dacheng Tao, Jiaxing Huang

NeurIPS 2025

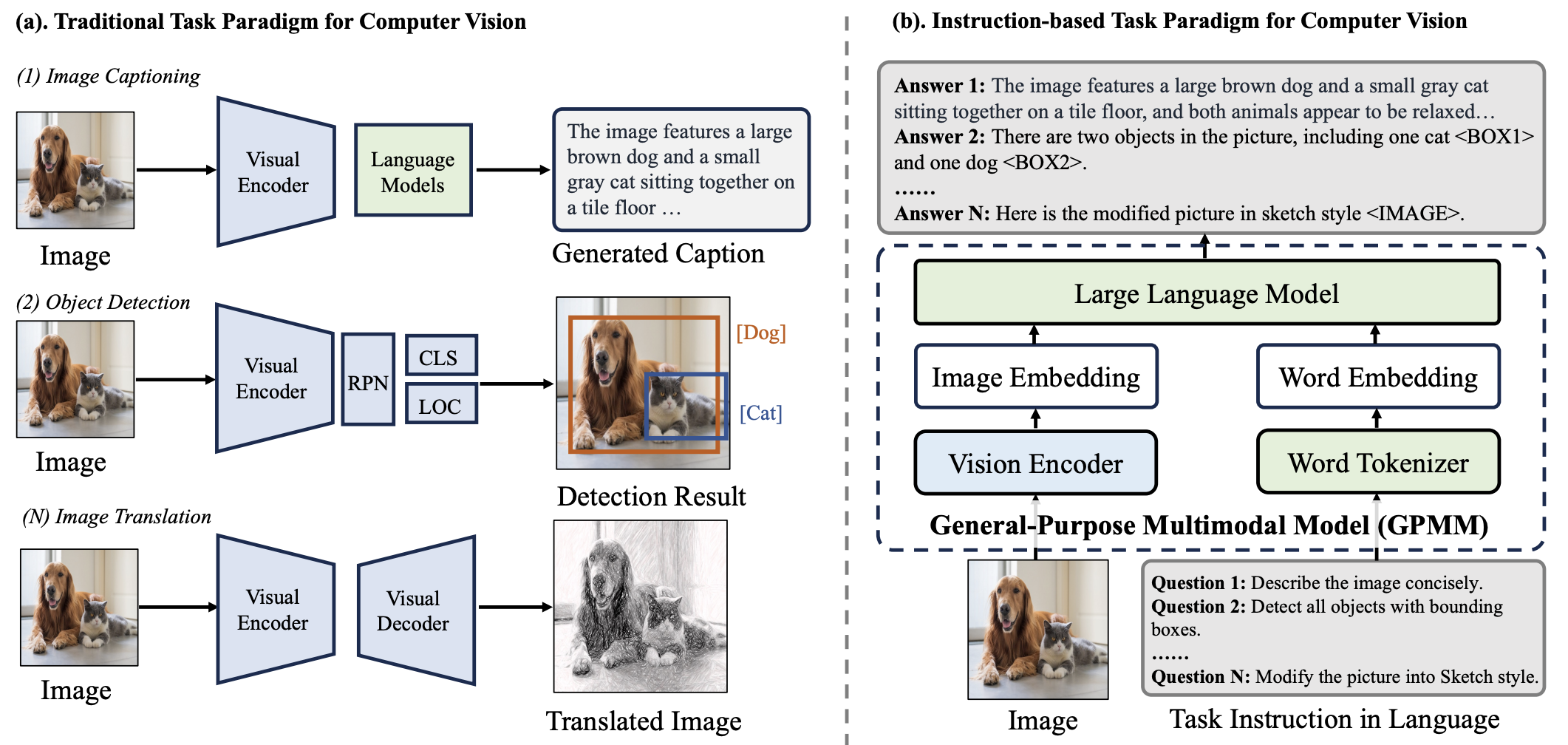

Visual Instruction Tuning towards General-Purpose Multimodal Model: A Survey

Jiaxing Huang, Jingyi Zhang, Kai Jiang, Han Qiu, Shijian Lu

IJCV 2025

Jingyi Zhang, Jiaxing Huang, Huanjin Yao, Shunyu Liu, Xikun Zhang, Shijian Lu, Dacheng Tao

ICCV 2025

MMReason: An Open-Ended Multi-Modal Multi-Step Reasoning Benchmark for MLLMs Toward AGI

Huanjin Yao, Jiaxing Huang, Yawen Qiu, Michael K Chen, Wenzheng Liu, Wei Zhang, Wenjie Zeng, Xikun Zhang, Jingyi Zhang, Yuxin Song, Wenhao Wu, Dacheng Tao

ICCV 2025

2024

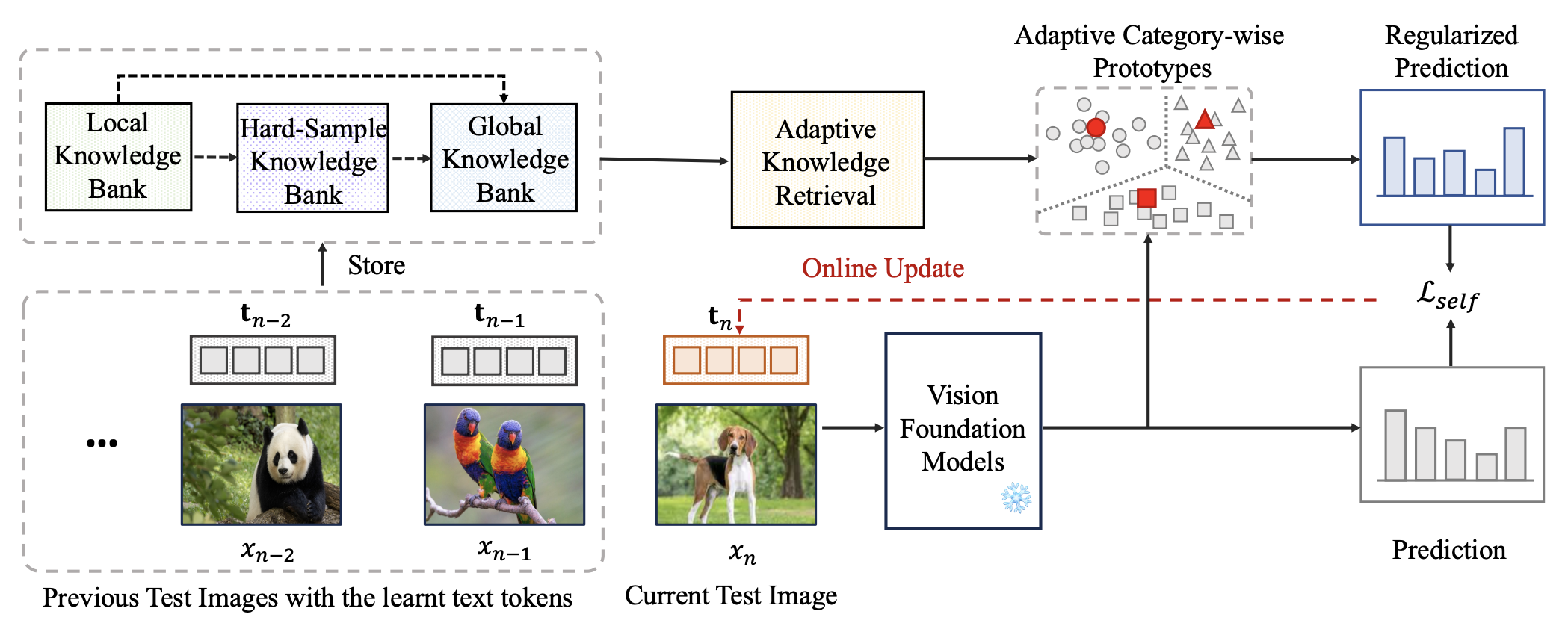

Historical Test-time Prompt Tuning for Vision Foundation Models

Jingyi Zhang, Jiaxing Huang, Xiaoqin Zhang, Ling Shao, Shijian Lu

NeurIPS 2024

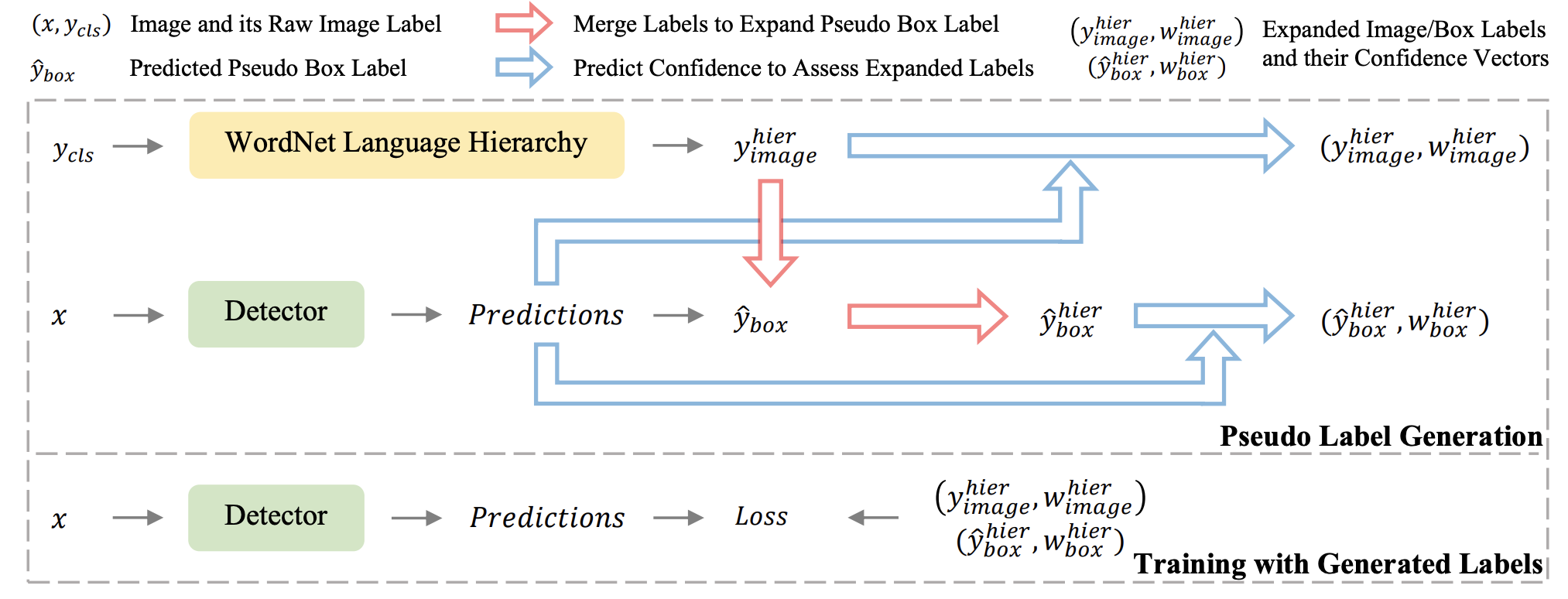

Open-Vocabulary Object Detection via Language Hierarchy

Jiaxing Huang, Jingyi Zhang, Kai Jiang, Shijian Lu

NeurIPS 2024

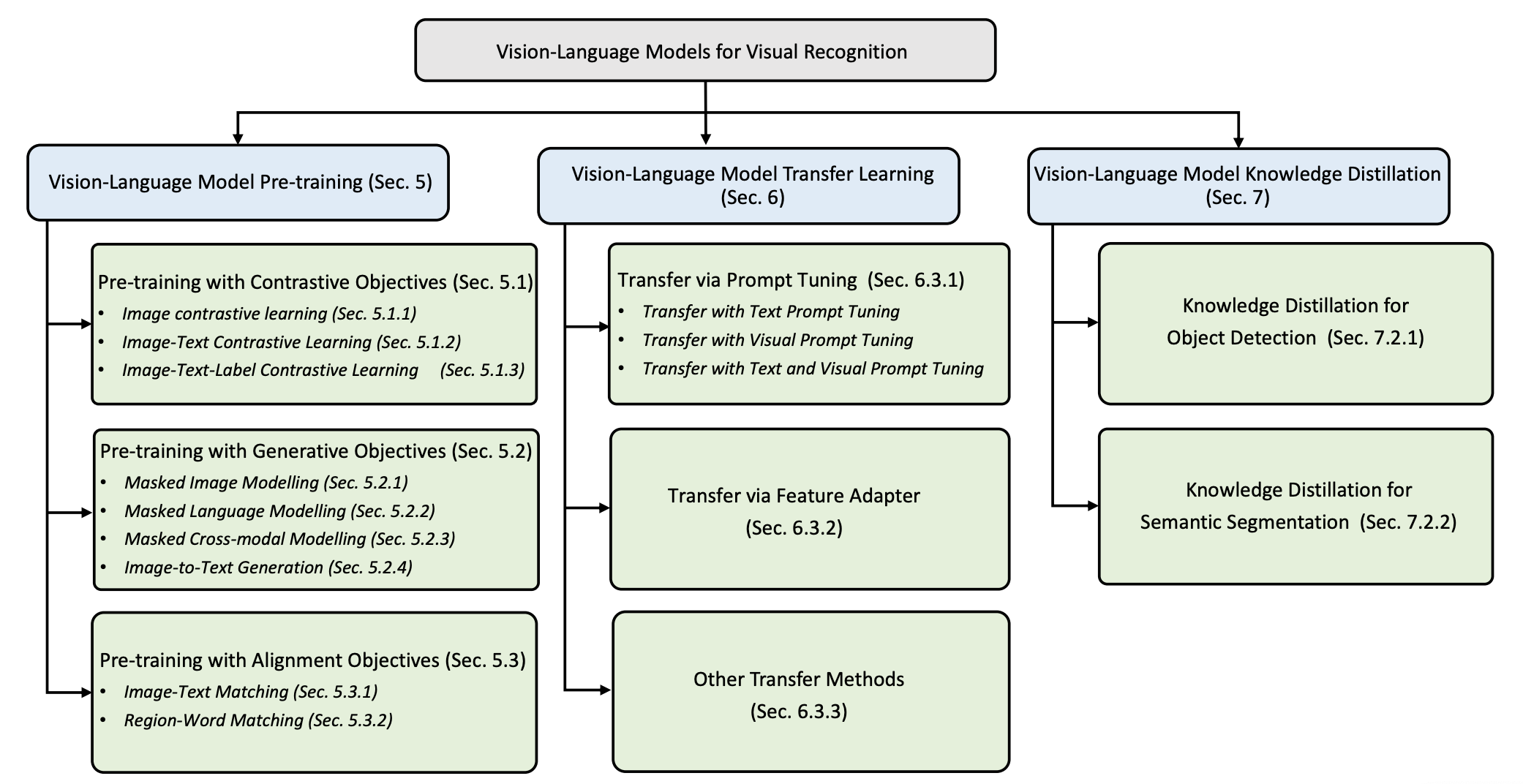

Vision-Language Models for Vision Tasks: A Survey

Jingyi Zhang, Jiaxing Huang, Sheng Jin, Shijian Lu

TPAMI 2024 (Top 50 popular paper)

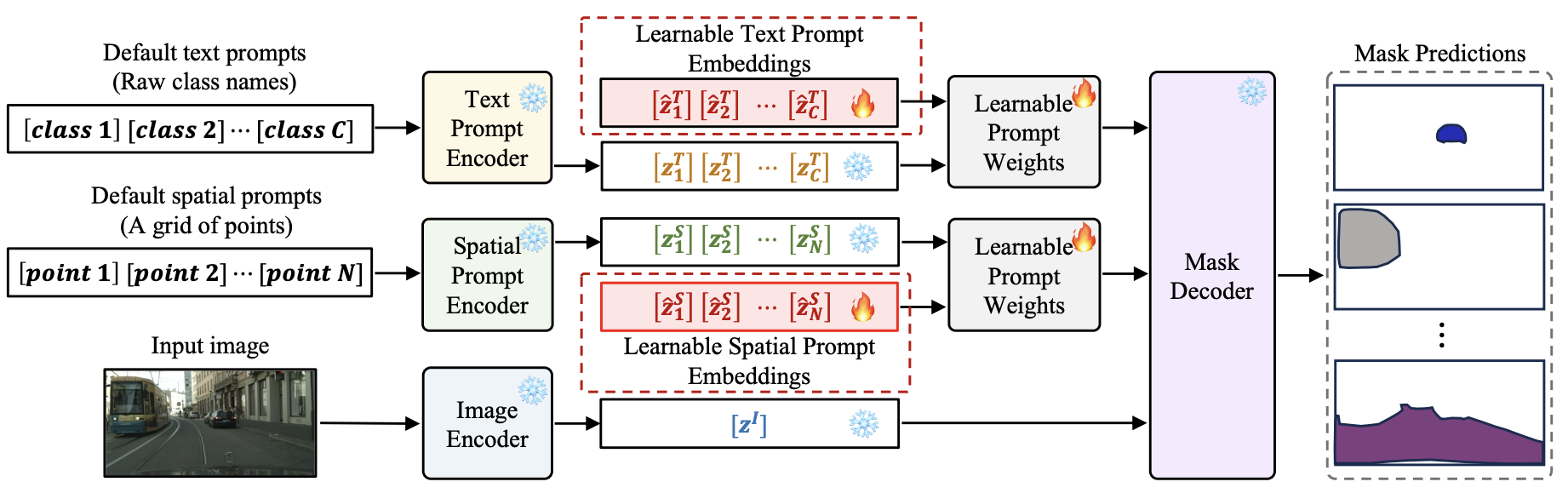

Learning to Prompt Segment Anything Models

Jiaxing Huang, Kai Jiang, Jingyi Zhang, Han Qiu, Lewei Lu, Shijian Lu, Eric Xing

arXiv

2023

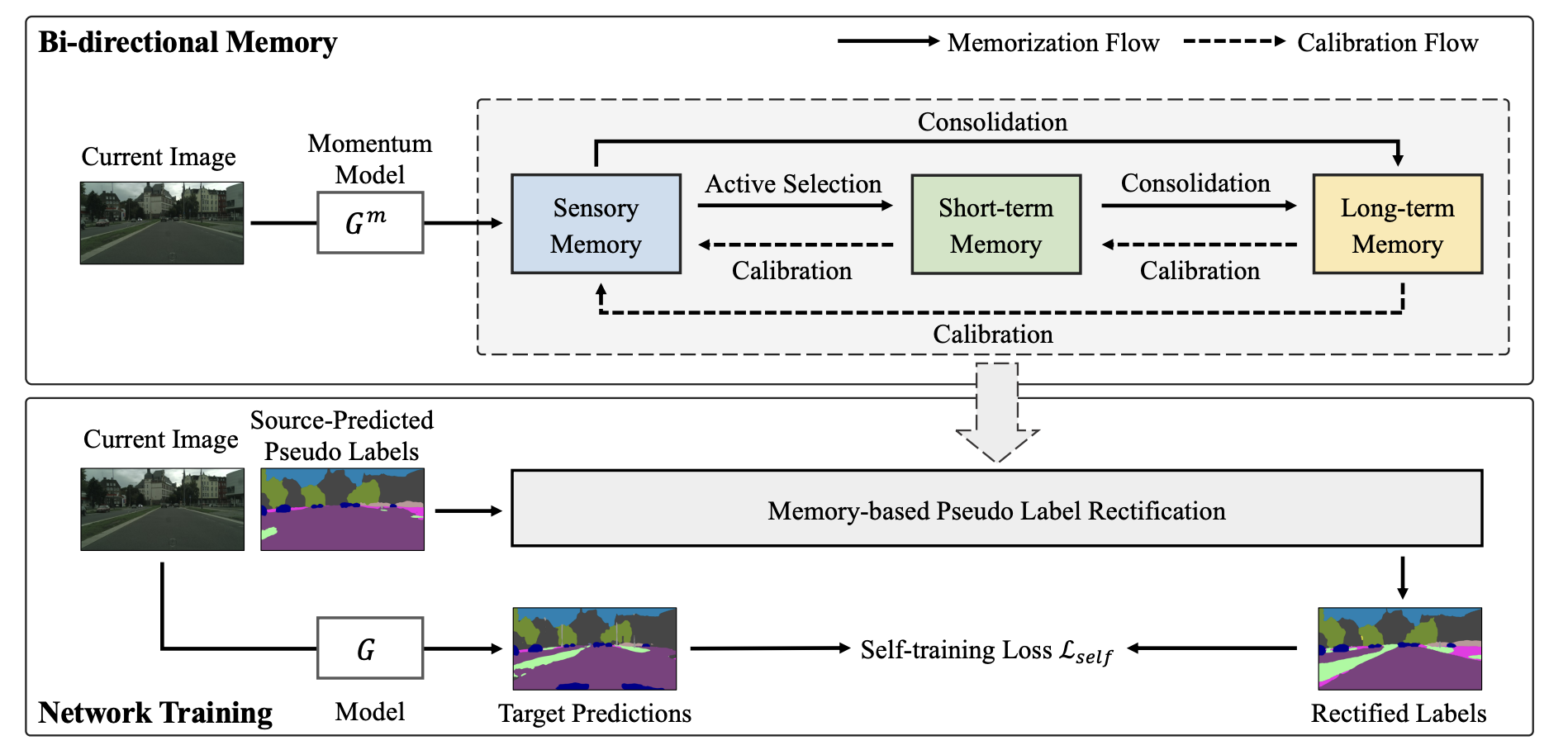

Black-box Unsupervised Domain Adaptation with Bi-directional Atkinson-Shiffrin Memory

Jingyi Zhang, Jiaxing Huang, Xueying Jiang, Shijian Lu

ICCV 2023

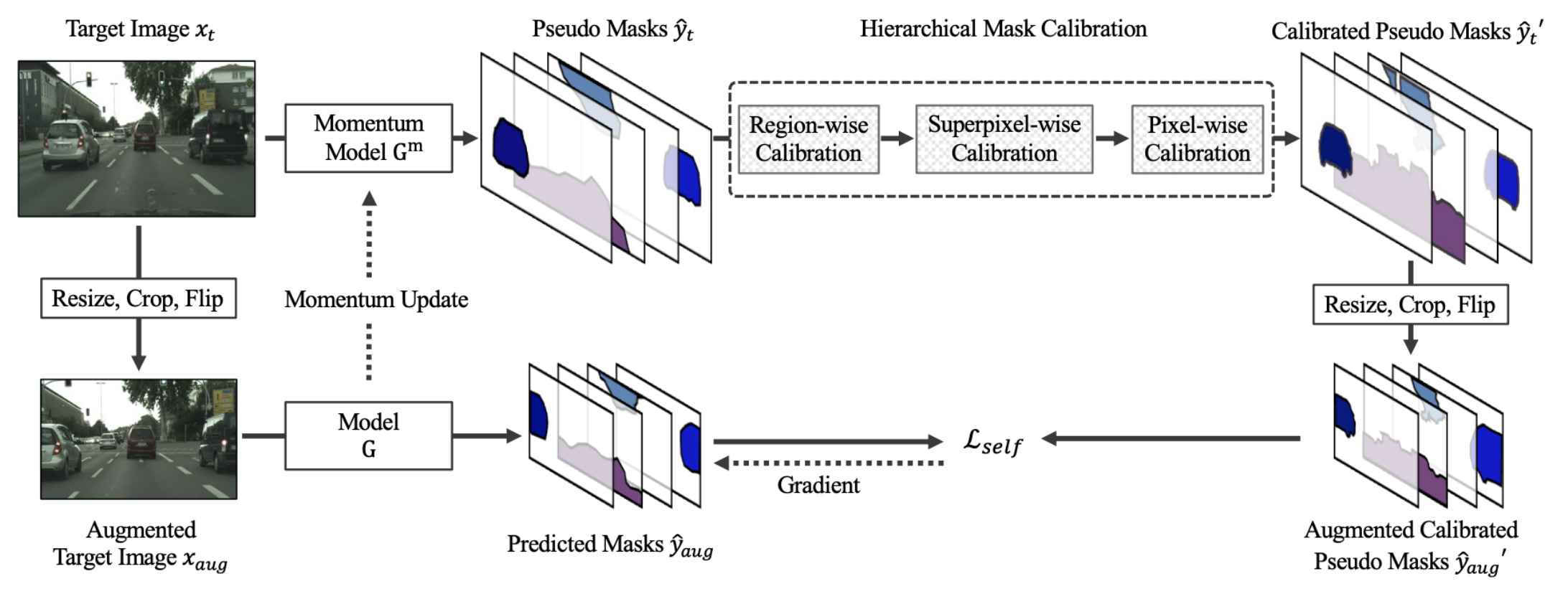

Jingyi Zhang, Jiaxing Huang, Xiaoqin Zhang, Shijian Lu

CVPR 2023

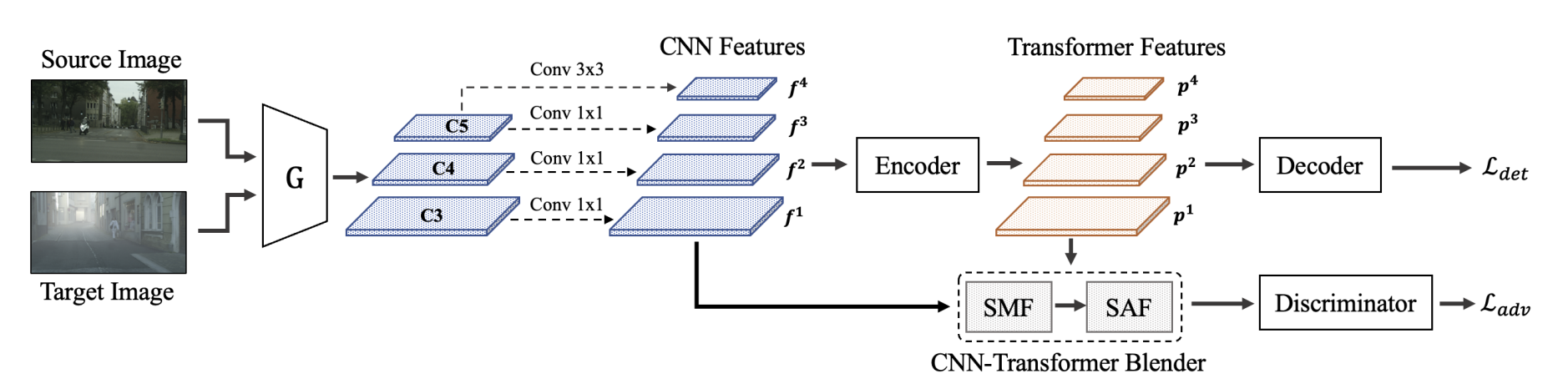

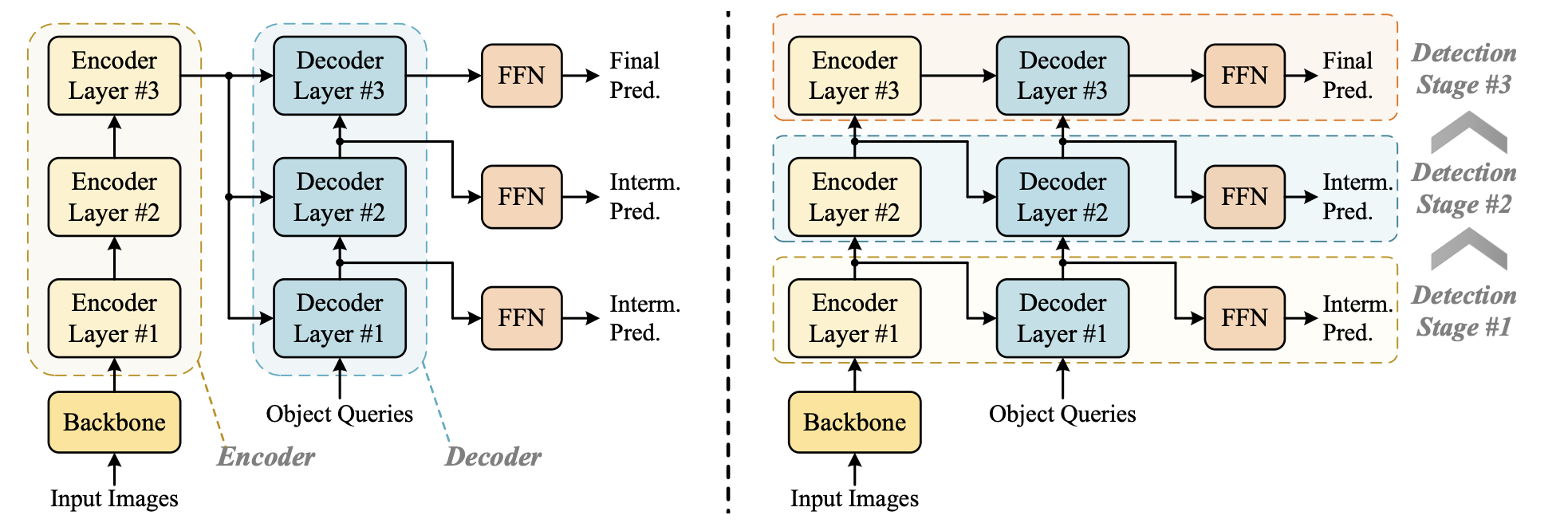

DA-DETR: Domain Adaptive Detection Transformer with Information Fusion

Jingyi Zhang, Jiaxing Huang, Zhipeng Luo, Gongjie Zhang, Xiaoqin Zhang, Shijian Lu

CVPR 2023

Towards Efficient Use of Multi-Scale Features in Transformer-Based Object Detectors

Gongjie Zhang, Zhipeng Luo, Yingchen Yu, Zichen Tian, Jingyi Zhang, Shijian Lu

CVPR 2023

2022

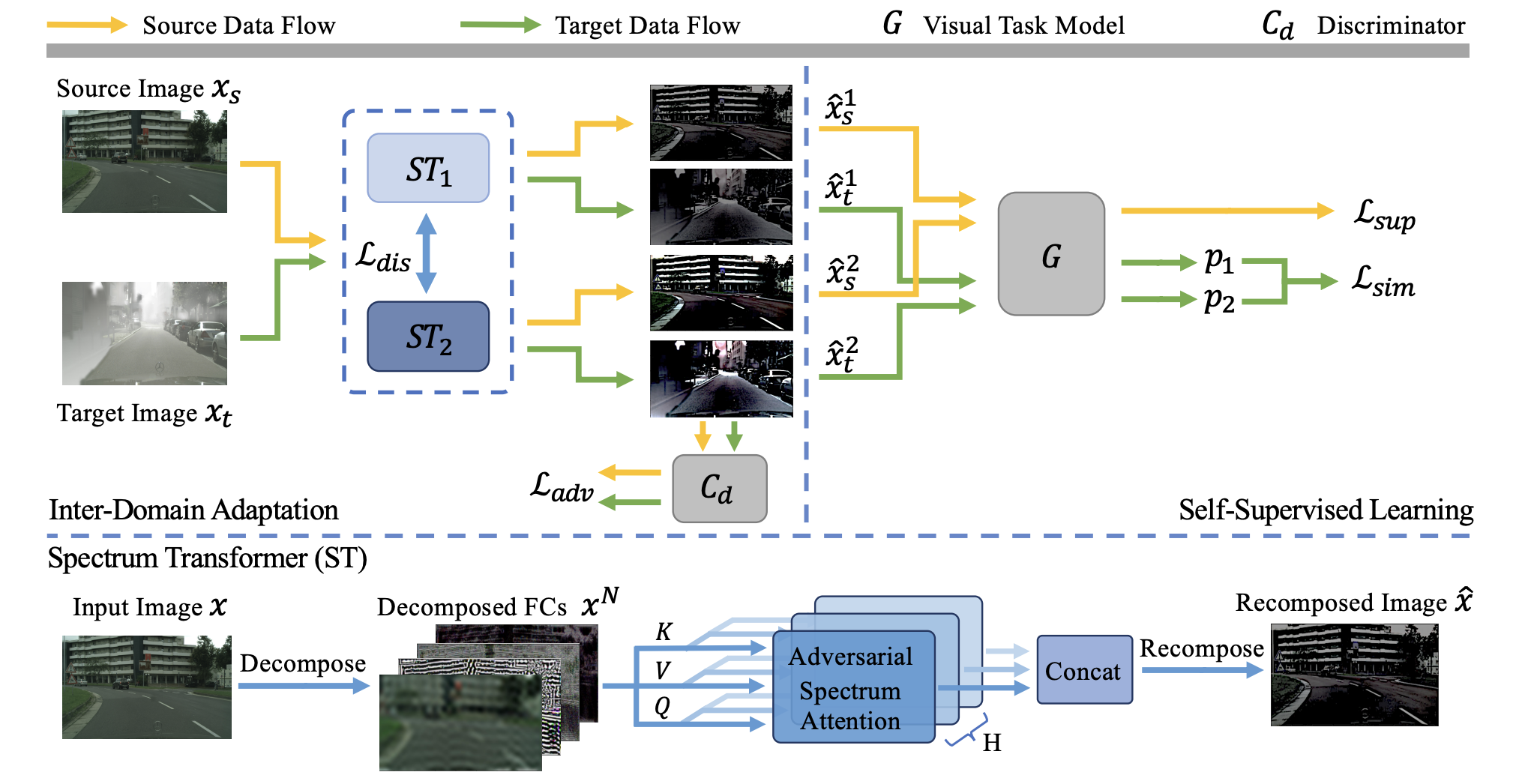

Spectral Unsupervised Domain Adaptation for Visual Recognition

Jingyi Zhang, Jiaxing Huang, Zichen Tian, Shijian Lu

CVPR 2022

📖 Education

- 2022.01 - 2025.08, Doctor of Philosophy, College of Computing and Data Science, Nanyang Technological University (NTU).

- 2019.07 - 2020.06, Master of Science, School of Electrical and Electronic Engineering, Nanyang Technological University (NTU).

- 2015.09 - 2019.06, Bachelor of Science, School of Electronic Information Science and Technology, University of Electronic Science and Technology of China (UESTC).

💻 Service

Conference Reviewer: NeurIPS, ICLR, ICML, CVPR, ICCV, ECCV, AAAI, BMVC, WACV

Journal Reviewer: TPAMI, IJCV, TCSVT, TMM, TETCI, Neural Networks

🏆 Award

- Outstanding Reviewer, WACV 2025.